Apache Beam Programming Guide

The Beam Programming Guide is intended for Beam users who want to use the Beam SDKs to create data processing pipelines. It provides guidance for using the Beam SDK classes to build and test your pipeline. The programming guide is not intended as an exhaustive reference, but as a language-agnostic, high-level guide to programmatically building your Beam pipeline. As the programming guide is filled out, the text will include code samples in multiple languages to help illustrate how to implement Beam concepts in your pipelines.

If you want a brief introduction to Beam’s basic concepts before reading the programming guide, take a look at the Basics of the Beam model page.

- Java SDK

- Python SDK

- Go SDK

- TypeScript SDK

- YAML API

The Python SDK supports Python 3.8, 3.9, 3.10, 3.11, and 3.12.

The Go SDK supports Go v1.20+.

The TypeScript SDK supports Node v16+ and is still experimental.

YAML is supported as of Beam 2.52, but is under active development and the most recent SDK is advised.

1. Overview

To use Beam, you need to first create a driver program using the classes in one of the Beam SDKs. Your driver program defines your pipeline, including all of the inputs, transforms, and outputs; it also sets execution options for your pipeline (typically passed in using command-line options). These include the Pipeline Runner, which, in turn, determines what back-end your pipeline will run on.

The Beam SDKs provide a number of abstractions that simplify the mechanics of large-scale distributed data processing. The same Beam abstractions work with both batch and streaming data sources. When you create your Beam pipeline, you can think about your data processing task in terms of these abstractions. They include:

- Pipeline: A Pipeline encapsulates your entire data processing task, from start to finish. This includes reading input data, transforming that data, and writing output data. All Beam driver programs must create a Pipeline. When you create the Pipeline, you must also specify the execution options that tell the Pipeline where and how to run.

- PCollection: A PCollection represents a distributed data set that your Beam pipeline operates on. The data set can be bounded, meaning it comes from a fixed source like a file, or unbounded, meaning it comes from a continuously updating source via a subscription or other mechanism. Your pipeline typically creates an initial PCollection by reading data from an external data source, but you can also create a PCollection from in-memory data within your driver program. From there, PCollections are the inputs and outputs for each step in your pipeline.

- PTransform: A PTransform represents a data processing operation, or a step, in your pipeline. Every PTransform takes one or more PCollection objects as input, performs a processing function that you provide on the elements of that PCollection, and produces zero or more output PCollection objects.

- Scope: The Go SDK has an explicit scope variable used to build a Pipeline. A Pipeline can return its root scope with the Root() method. The scope variable is passed to PTransform functions to place them in the Pipeline that owns the Scope.

- I/O transforms: Beam comes with a number of “IOs”: library PTransforms that read or write data to various external storage systems.

A typical Beam driver program works as follows:

- Create a Pipeline object and set the pipeline execution options, including the Pipeline Runner.

- Create an initial PCollection for pipeline data, either using the IOs to read data from an external storage system, or using a Create transform to build a PCollection from in-memory data.

- Apply PTransforms to each PCollection. Transforms can change, filter, group, analyze, or otherwise process the elements in a PCollection. A transform creates a new output PCollection without modifying the input collection. A typical pipeline applies subsequent transforms to each new output PCollection in turn until processing is complete. However, a pipeline does not have to be a single straight line of transforms applied one after another. Think of PCollections as variables and PTransforms as functions applied to these variables: the shape of the pipeline can be an arbitrarily complex processing graph.

- Use IOs to write the final, transformed PCollection(s) to an external storage system.

- Run the pipeline using the designated Pipeline Runner.

When you run your Beam driver program, the Pipeline Runner that you designate constructs a workflow graph of your pipeline based on the PCollection objects you’ve created and transforms that you’ve applied. That graph is then executed using the appropriate distributed processing back-end, becoming an asynchronous “job” (or equivalent) on that back-end.
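The steps above can be sketched in Python as a minimal driver program. This is an illustrative sketch rather than a canonical template: the names to_upper and run and the sample strings are assumptions, and the Beam imports sit inside run() only so that the helper can be exercised without Beam installed.

```python
def to_upper(line):
    """Per-element logic applied by the transform step."""
    return line.upper()


def run(argv=None):
    # Imported lazily so to_upper can be used without Beam installed.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    # 1. Create the Pipeline and set its execution options (runner, etc.).
    options = PipelineOptions(argv)
    with beam.Pipeline(options=options) as pipeline:
        # 2. Create an initial PCollection from in-memory data.
        lines = pipeline | "CreateLines" >> beam.Create(
            ["to be", "or not to be"])
        # 3. Apply a transform; the input PCollection is left unchanged.
        shouted = lines | "Uppercase" >> beam.Map(to_upper)
        # 4. Output step; a real pipeline would use an IO such as WriteToText.
        shouted | "Print" >> beam.Map(print)
    # 5. Leaving the with-block runs the pipeline on the configured runner.
```

With Beam installed, calling run() executes the pipeline on the default runner unless a --runner option is passed in argv.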

2. Creating a pipeline

The Pipeline abstraction encapsulates all the data and steps in your data processing task. Your Beam driver program typically starts by constructing a Pipeline object, and then using that object as the basis for creating the pipeline’s data sets as PCollections and its operations as Transforms.

To use Beam, your driver program must first create an instance of the Beam SDK

class Pipeline (typically in the main() function). When you create your

Pipeline, you’ll also need to set some configuration options. You can set

your pipeline’s configuration options programmatically, but it’s often easier to

set the options ahead of time (or read them from the command line) and pass them

to the Pipeline object when you create the object.

For a more in-depth tutorial on creating basic pipelines in the Python SDK, please read and work through this colab notebook.

2.1. Configuring pipeline options

Use the pipeline options to configure different aspects of your pipeline, such as the pipeline runner that will execute your pipeline and any runner-specific configuration required by the chosen runner. Your pipeline options will potentially include information such as your project ID or a location for storing files.

When you run the pipeline on a runner of your choice, a copy of the

PipelineOptions will be available to your code. For example, if you add a PipelineOptions parameter

to a DoFn’s @ProcessElement method, it will be populated by the system.

2.1.1. Setting PipelineOptions from command-line arguments

While you can configure your pipeline by creating a PipelineOptions object and

setting the fields directly, the Beam SDKs include a command-line parser that

you can use to set fields in PipelineOptions using command-line arguments.

To read options from the command line, construct your PipelineOptions object from the program arguments; in Java, for example, with PipelineOptionsFactory.fromArgs(args).withValidation().create().

Use Go flags to parse command line arguments to configure your pipeline. Flags must be parsed

before beam.Init() is called.

Any JavaScript object can be used as pipeline options. You can construct one manually, but it is also common to pass an object created from command-line options, such as yargs.argv.

Pipeline options are simply an optional YAML mapping property that is a sibling to the pipeline definition itself. It will be merged with whatever options are passed on the command line.

This interprets command-line arguments that follow the format:

--<option>=<value>

Appending the method .withValidation will check for required command-line arguments and validate argument values.

Building your PipelineOptions this way lets you specify any of the options as

a command-line argument.

Defining flag variables this way lets you specify any of the options as a command-line argument.

Note: The WordCount example pipeline demonstrates how to set pipeline options at runtime by using command-line options.

2.1.2. Creating custom options

You can add your own custom options in addition to the standard

PipelineOptions.

To add your own options, define an interface with getter and setter methods for each option, for example getInput/setInput and getOutput/setOutput for input and output custom options.

You can also specify a description, which appears when a user passes --help as a command-line argument, and a default value.

You set the description and default value using annotations, as follows:

public interface MyOptions extends PipelineOptions {
    @Description("Input for the pipeline")
    @Default.String("gs://my-bucket/input")
    String getInput();
    void setInput(String input);

    @Description("Output for the pipeline")
    @Default.String("gs://my-bucket/output")
    String getOutput();
    void setOutput(String output);
}

from apache_beam.options.pipeline_options import PipelineOptions

class MyOptions(PipelineOptions):
  @classmethod
  def _add_argparse_args(cls, parser):
    parser.add_argument(
        '--input',
        default='gs://dataflow-samples/shakespeare/kinglear.txt',
        help='The file path for the input text to process.')
    parser.add_argument(
        '--output', required=True, help='The path prefix for output files.')

For Python, you can also simply parse your custom options with argparse; there is no need to create a separate PipelineOptions subclass.

It’s recommended that you register your interface with PipelineOptionsFactory

and then pass the interface when creating the PipelineOptions object. When you

register your interface with PipelineOptionsFactory, the --help can find

your custom options interface and add it to the output of the --help command.

PipelineOptionsFactory will also validate that your custom options are

compatible with all other registered options.

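To register your custom options interface with PipelineOptionsFactory, call PipelineOptionsFactory.register(MyOptions.class), and then create the options object with PipelineOptionsFactory.fromArgs(args).withValidation().as(MyOptions.class).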

Now your pipeline can accept --input=value and --output=value as command-line arguments.
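For the Python route mentioned above (plain argparse rather than a PipelineOptions subclass), a minimal sketch might look like the following. The helper names parse_args and run are hypothetical; parse_known_args keeps --input and --output and leaves everything else, such as --runner, to be passed on to PipelineOptions.

```python
import argparse


def parse_args(argv=None):
    """Split custom options from the options meant for Beam itself."""
    parser = argparse.ArgumentParser()
    parser.add_argument(
        "--input",
        default="gs://dataflow-samples/shakespeare/kinglear.txt",
        help="The file path for the input text to process.")
    parser.add_argument(
        "--output", required=True, help="The path prefix for output files.")
    # Unrecognized arguments are returned untouched in the second value.
    known_args, pipeline_args = parser.parse_known_args(argv)
    return known_args, pipeline_args


def run(argv=None):
    # Imported lazily so parse_args can be used without Beam installed.
    import apache_beam as beam
    from apache_beam.options.pipeline_options import PipelineOptions

    known_args, pipeline_args = parse_args(argv)
    with beam.Pipeline(options=PipelineOptions(pipeline_args)) as pipeline:
        (
            pipeline
            | "Read" >> beam.io.ReadFromText(known_args.input)
            | "Write" >> beam.io.WriteToText(known_args.output)
        )
```

A call such as run(["--output=/tmp/out", "--runner=DirectRunner"]) would then use the default input path and hand --runner to Beam.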

3. PCollections

The PCollection abstraction represents a potentially distributed, multi-element data set. You can think of a PCollection as “pipeline” data; Beam transforms use PCollection objects as inputs and outputs. As such, if you want to work with data in your pipeline, it must be in the form of a PCollection.

After you’ve created your Pipeline, you’ll need to begin by creating at least

one PCollection in some form. The PCollection you create serves as the input

for the first operation in your pipeline.

3.1. Creating a PCollection

You create a PCollection either by reading data from an external source using Beam’s Source API, or by creating a PCollection of data stored in an in-memory collection class in your driver program. The former is typically how a production pipeline would ingest data; Beam’s Source APIs contain adapters to help you read from external sources like large cloud-based files, databases, or subscription services. The latter is primarily useful for testing and debugging purposes.

3.1.1. Reading from an external source

To read from an external source, you use one of the Beam-provided I/O

adapters. The adapters vary in their exact usage, but all of them

read from some external data source and return a PCollection whose elements

represent the data records in that source.

Each data source adapter has a Read transform; to read, you must apply that transform to the Pipeline object itself. In Beam YAML, place this transform in the source or transforms portion of the pipeline.

The text-file read transform (named TextIO.Read, io.TextFileSource, textio.Read, textio.ReadFromText, or ReadFromText, depending on the SDK), for example, reads from an external text file and returns a PCollection whose elements are of type String, where each String represents one line from the text file. Here’s how you would apply TextIO.Read to your Pipeline root to create a PCollection:

public static void main(String[] args) {
    // Create the pipeline.
    PipelineOptions options =
        PipelineOptionsFactory.fromArgs(args).create();
    Pipeline p = Pipeline.create(options);

    // Create the PCollection 'lines' by applying a 'Read' transform.
    PCollection<String> lines = p.apply(
        "ReadMyFile", TextIO.read().from("gs://some/inputData.txt"));
}

See the section on I/O to learn more about how to read from the various data sources supported by the Beam SDK.

3.1.2. Creating a PCollection from in-memory data

To create a PCollection from an in-memory Java Collection, you use the

Beam-provided Create transform. Much like a data adapter’s Read, you apply

Create directly to your Pipeline object itself.

As parameters, Create accepts the Java Collection and a Coder object. The

Coder specifies how the elements in the Collection should be

encoded.

To create a PCollection from an in-memory list, you use the Beam-provided

Create transform. Apply this transform directly to your Pipeline object

itself.

To create a PCollection from an in-memory slice, you use the Beam-provided

beam.CreateList transform. Pass the pipeline scope, and the slice to this transform.

To create a PCollection from an in-memory array, you use the Beam-provided

Create transform. Apply this transform directly to your Root object.

To create a PCollection from an in-memory array, you use the Beam-provided

Create transform. Specify the elements in the pipeline itself.

The following example code shows how to create a PCollection from an in-memory List (Java) and from an in-memory slice (Go):

public static void main(String[] args) {
    // Create a Java Collection, in this case a List of Strings.
    final List<String> LINES = Arrays.asList(
        "To be, or not to be: that is the question: ",
        "Whether 'tis nobler in the mind to suffer ",
        "The slings and arrows of outrageous fortune, ",
        "Or to take arms against a sea of troubles, ");

    // Create the pipeline.
    PipelineOptions options =
        PipelineOptionsFactory.fromArgs(args).create();
    Pipeline p = Pipeline.create(options);

    // Apply Create, passing the list and the coder, to create the PCollection.
    p.apply(Create.of(LINES)).setCoder(StringUtf8Coder.of());
}

lines := []string{
    "To be, or not to be: that is the question: ",
    "Whether 'tis nobler in the mind to suffer ",
    "The slings and arrows of outrageous fortune, ",
    "Or to take arms against a sea of troubles, ",
}

// Create the Pipeline object and root scope.
// It's conventional to use p as the Pipeline variable and
// s as the scope variable.
p, s := beam.NewPipelineWithRoot()

// Pass the slice to beam.CreateList, to create the pcollection.
// The scope variable s is used to add the CreateList transform
// to the pipeline.
linesPCol := beam.CreateList(s, lines)

3.2. PCollection characteristics

A PCollection is owned by the specific Pipeline object for which it is

created; multiple pipelines cannot share a PCollection.

In some respects, a PCollection functions like

a Collection class. However, a PCollection can differ in a few key ways:

3.2.1. Element type

The elements of a PCollection may be of any type, but must all be of the same

type. However, to support distributed processing, Beam needs to be able to

encode each individual element as a byte string (so elements can be passed

around to distributed workers). The Beam SDKs provide a data encoding mechanism

that includes built-in encoding for commonly-used types as well as support for

specifying custom encodings as needed.

3.2.2. Element schema

In many cases, the element type in a PCollection has a structure that can be introspected.

Examples are JSON, Protocol Buffer, Avro, and database records. Schemas provide a way to

express types as a set of named fields, allowing for more-expressive aggregations.

3.2.3. Immutability

A PCollection is immutable. Once created, you cannot add, remove, or change

individual elements. A Beam Transform might process each element of a

PCollection and generate new pipeline data (as a new PCollection), but it

does not consume or modify the original input collection.

Note: Beam SDKs avoid unnecessary copying of elements, so PCollection contents are logically immutable, not physically immutable. Changes to input elements may be visible to other DoFns executing within the same bundle, and may cause correctness issues. As a rule, it’s not safe to modify values provided to a DoFn.
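To make the rule concrete, here is a small Python sketch of per-element logic that builds a new value instead of modifying its input. The function names and the record layout (a dict with a "word" key) are assumptions chosen for illustration.

```python
def add_length(record):
    """Return a new dict with a 'length' field; the input is not modified."""
    # Shallow copy of the input element. Nested values would still be
    # shared, so use copy.deepcopy if the element holds mutable members.
    updated = dict(record)
    updated["length"] = len(record["word"])
    return updated


def add_length_unsafe(record):
    """Anti-pattern: mutates the element that was handed to the function."""
    record["length"] = len(record["word"])
    return record
```

With Beam installed, add_length could be applied to a PCollection of such dicts with beam.Map(add_length); the unsafe variant is shown only to contrast the two approaches.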

3.2.4. Random access

A PCollection does not support random access to individual elements. Instead,

Beam Transforms consider every element in a PCollection individually.

3.2.5. Size and boundedness

A PCollection is a large, immutable “bag” of elements. There is no upper limit

on how many elements a PCollection can contain; any given PCollection might

fit in memory on a single machine, or it might represent a very large

distributed data set backed by a persistent data store.

A PCollection can be either bounded or unbounded in size. A

bounded PCollection represents a data set of a known, fixed size, while an

unbounded PCollection represents a data set of unlimited size. Whether a

PCollection is bounded or unbounded depends on the source of the data set that

it represents. Reading from a batch data source, such as a file or a database,

creates a bounded PCollection. Reading from a streaming or

continuously-updating data source, such as Pub/Sub or Kafka, creates an unbounded

PCollection (unless you explicitly tell it not to).

The bounded (or unbounded) nature of your PCollection affects how Beam

processes your data. A bounded PCollection can be processed using a batch job,

which might read the entire data set once, and perform processing in a job of

finite length. An unbounded PCollection must be processed using a streaming

job that runs continuously, as the entire collection can never be available for

processing at any one time.

Beam uses windowing to divide a continuously updating unbounded

PCollection into logical windows of finite size. These logical windows are

determined by some characteristic associated with a data element, such as a

timestamp. Aggregation transforms (such as GroupByKey and Combine) work

on a per-window basis — as the data set is generated, they process each

PCollection as a succession of these finite windows.
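The idea of assigning elements to finite windows by timestamp can be illustrated without any Beam code. The sketch below assumes fixed-size, non-overlapping windows with timestamps and sizes in seconds; fixed_window and count_per_window are hypothetical helpers, not Beam APIs.

```python
from collections import defaultdict


def fixed_window(timestamp, size, offset=0):
    """Return the [start, end) bounds of the fixed window holding timestamp."""
    start = timestamp - (timestamp - offset) % size
    return (start, start + size)


def count_per_window(events, size):
    """Count (timestamp, value) events per window, as a per-window
    aggregation would do for each finite slice of an unbounded input."""
    counts = defaultdict(int)
    for timestamp, _value in events:
        counts[fixed_window(timestamp, size)] += 1
    return dict(counts)
```

For example, with 60-second windows an element stamped 125 falls in the window (120, 180), so an aggregation over that window only sees elements stamped from 120 up to, but not including, 180.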

3.2.6. Element timestamps

Each element in a PCollection has an associated intrinsic timestamp. The

timestamp for each element is initially assigned by the Source

that creates the PCollection. Sources that create an unbounded PCollection

often assign each new element a timestamp that corresponds to when the element

was read or added.

Note: Sources that create a bounded PCollection for a fixed data set also automatically assign timestamps, but the most common behavior is to assign every element the same timestamp (Long.MIN_VALUE).

Timestamps are useful for a PCollection that contains elements with an

inherent notion of time. If your pipeline is reading a stream of events, like

Tweets or other social media messages, each element might use the time the event

was posted as the element timestamp.

You can manually assign timestamps to the elements of a PCollection if the source doesn’t do it for you. You’ll want to do this if the elements have an inherent timestamp, but the timestamp is somewhere in the structure of the element itself (such as a “time” field in a server log entry). Beam has Transforms that take a PCollection as input and output an identical PCollection with timestamps attached; see Adding Timestamps for more information about how to do so.
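As a rough Python sketch of the log-entry case, the helper below pulls the event time out of each element and the pipeline wraps the element in beam.window.TimestampedValue. The entry layout (a dict whose "time" field holds seconds since the epoch) and the names extract_event_time and run are assumptions for illustration.

```python
def extract_event_time(entry):
    """Read the event time (seconds since the epoch) from the 'time' field."""
    return float(entry["time"])


def run():
    # Imported lazily so extract_event_time can be used without Beam.
    import apache_beam as beam

    entries = [
        {"time": "1700000000", "msg": "start"},
        {"time": "1700000060", "msg": "stop"},
    ]
    with beam.Pipeline() as pipeline:
        (
            pipeline
            | "CreateEntries" >> beam.Create(entries)
            # Same elements out, now carrying the timestamp from each entry.
            | "AddTimestamps" >> beam.Map(
                lambda entry: beam.window.TimestampedValue(
                    entry, extract_event_time(entry)))
            | "Print" >> beam.Map(print)
        )
```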

4. Transforms

Transforms are the operations in your pipeline, and provide a generic

processing framework. You provide processing logic in the form of a function

object (colloquially referred to as “user code”), and your user code is applied

to each element of an input PCollection (or more than one PCollection).

Depending on the pipeline runner and back-end that you choose, many different

workers across a cluster may execute instances of your user code in parallel.

The user code running on each worker generates the output elements that are

ultimately added to the final output PCollection that the transform produces.

Aggregation is an important concept to understand when learning about Beam’s transforms. For an introduction to aggregation, see the Basics of the Beam model Aggregation section.

The Beam SDKs contain a number of different transforms that you can apply to

your pipeline’s PCollections. These include general-purpose core transforms,

such as ParDo or Combine. There are also pre-written

composite transforms included in the SDKs, which

combine one or more of the core transforms in a useful processing pattern, such

as counting or combining elements in a collection. You can also define your own

more complex composite transforms to fit your pipeline’s exact use case.

For a more in-depth tutorial of applying various transforms in the Python SDK, please read and work through this colab notebook.

4.1. Applying transforms

To invoke a transform, you must apply it to the input PCollection. Each

transform in the Beam SDKs has a generic apply method

(or pipe operator |).

Invoking multiple Beam transforms is similar to method chaining, but with one

slight difference: You apply the transform to the input PCollection, passing

the transform itself as an argument, and the operation returns the output

PCollection.


In YAML, transforms are applied by listing their inputs.

This takes the general form:

If a transform has more than one (non-error) output, the various outputs can be identified by explicitly giving the output name.

For linear pipelines, this can be further simplified by setting the pipeline's type to chain, in which case the inputs are determined implicitly from the ordering of the transforms.

Because Beam uses a generic apply method for PCollection, you can both chain

transforms sequentially and also apply transforms that contain other transforms

nested within (called composite transforms in the Beam

SDKs).

It’s recommended to create a new variable for each new PCollection to

sequentially transform input data. Scopes can be used to create functions

that contain other transforms

(called composite transforms in the Beam SDKs).

How you apply your pipeline’s transforms determines the structure of your

pipeline. The best way to think of your pipeline is as a directed acyclic graph,

where PTransform nodes are subroutines that accept PCollection nodes as

inputs and emit PCollection nodes as outputs.

For example, you can chain together transforms to create a pipeline that successively modifies input data:

For example, you can successively call transforms on PCollections to modify the input data:

The graph of this pipeline looks like the following:

Figure 1: A linear pipeline with three sequential transforms.
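A linear pipeline of this shape could be sketched in Python as follows. The three helper functions and the sample words are assumptions chosen for illustration; each step takes the previous step's output PCollection as its input.

```python
def normalize(word):
    """First transform: trim whitespace and lowercase."""
    return word.strip().lower()


def is_nonempty(word):
    """Second transform: predicate used to drop empty strings."""
    return bool(word)


def with_length(word):
    """Third transform: pair each word with its length."""
    return (word, len(word))


def run():
    # Imported lazily; the helpers above do not need Beam.
    import apache_beam as beam

    with beam.Pipeline() as pipeline:
        words = pipeline | "CreateWords" >> beam.Create([" To ", "be", "  "])
        # Each transform returns a new PCollection; none modifies its input.
        normalized = words | "Normalize" >> beam.Map(normalize)
        nonempty = normalized | "DropEmpty" >> beam.Filter(is_nonempty)
        pairs = nonempty | "AddLength" >> beam.Map(with_length)
        pairs | "Print" >> beam.Map(print)
```

Giving each intermediate PCollection its own variable is optional in Python, since the same steps can be chained with | in one expression, but it makes the graph easier to read.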

However, note that a transform does not consume or otherwise alter the input

collection — remember that a PCollection is immutable by definition. This means

that you can apply multiple transforms to the same input PCollection to create

a branching pipeline, like so:

The graph of this branching pipeline looks like the following:

Figure 2: A branching pipeline. Two transforms are applied to a single PCollection of database table rows.
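A branching pipeline can be sketched in Python by applying two transforms to the same PCollection variable. The names list and the starts_with helper are hypothetical stand-ins for the database table rows in the figure.

```python
def starts_with(prefix):
    """Build a predicate that keeps names beginning with prefix."""
    def predicate(name):
        return name.startswith(prefix)
    return predicate


def run():
    # Imported lazily; starts_with itself needs no Beam.
    import apache_beam as beam

    with beam.Pipeline() as pipeline:
        names = pipeline | "CreateNames" >> beam.Create(
            ["Alice", "Bob", "Anna", "Bill"])
        # Two independent transforms read the same input PCollection.
        a_names = names | "KeepA" >> beam.Filter(starts_with("A"))
        b_names = names | "KeepB" >> beam.Filter(starts_with("B"))
        a_names | "PrintA" >> beam.Map(lambda name: print("A:", name))
        b_names | "PrintB" >> beam.Map(lambda name: print("B:", name))
```

Because names is never consumed or altered, both branches see all four input elements.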

You can also build your own composite transforms that nest multiple transforms inside a single, larger transform. Composite transforms are particularly useful for building a reusable sequence of simple steps that get used in a lot of different places.

The pipe syntax allows one to apply PTransforms to tuples and dicts of

PCollections as well for those transforms accepting multiple inputs (such as

Flatten and CoGroupByKey).

PTransforms can also be applied to any PValue, which include the Root object,

PCollections, arrays of PValues, and objects with PValue values.

One can apply transforms to these composite types by wrapping them with

beam.P, e.g.

beam.P({left: pcollA, right: pcollB}).apply(transformExpectingTwoPCollections).

PTransforms come in two flavors, synchronous and asynchronous, depending on whether their application involves asynchronous invocations.

An AsyncTransform must be applied with applyAsync and returns a Promise

which must be awaited before further pipeline construction.

4.2. Core Beam transforms

Beam provides the following core transforms, each of which represents a different processing paradigm:

- ParDo

- GroupByKey

- CoGroupByKey

- Combine

- Flatten

- Partition

The TypeScript SDK provides some of the most basic of these transforms

as methods on PCollection itself.

4.2.1. ParDo

ParDo is a Beam transform for generic parallel processing. The ParDo

processing paradigm is similar to the “Map” phase of a

Map/Shuffle/Reduce-style

algorithm: a ParDo transform considers each element in the input

PCollection, performs some processing function (your user code) on that

element, and emits zero, one, or multiple elements to an output PCollection.

ParDo is useful for a variety of common data processing operations, including:

- Filtering a data set. You can use ParDo to consider each element in a PCollection and either output that element to a new collection or discard it.

- Formatting or type-converting each element in a data set. If your input PCollection contains elements that are of a different type or format than you want, you can use ParDo to perform a conversion on each element and output the result to a new PCollection.

- Extracting parts of each element in a data set. If you have a PCollection of records with multiple fields, for example, you can use a ParDo to parse out just the fields you want to consider into a new PCollection.

- Performing computations on each element in a data set. You can use ParDo to perform simple or complex computations on every element, or certain elements, of a PCollection and output the results as a new PCollection.

In such roles, ParDo is a common intermediate step in a pipeline. You might

use it to extract certain fields from a set of raw input records, or convert raw

input into a different format; you might also use ParDo to convert processed

data into a format suitable for output, like database table rows or printable

strings.
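The roles listed above can be sketched in Python with generator functions that emit zero or more outputs per input. This sketch uses beam.FlatMap, which applies a callable to each element and emits every item it yields, much as a ParDo with a DoFn would; the record layout ("name,age,city") and all function names are assumptions for illustration.

```python
def extract_name_and_age(line):
    """Extracting and type-converting: keep two fields, parse the age."""
    fields = line.split(",")
    yield (fields[0], int(fields[1]))


def keep_adults(pair, min_age=18):
    """Filtering: emit the element, or nothing at all."""
    _name, age = pair
    if age >= min_age:
        yield pair


def format_pair(pair):
    """Formatting: convert a (name, age) pair into a printable string."""
    name, age = pair
    yield f"{name}: {age}"


def run():
    # Imported lazily; the generator functions above do not need Beam.
    import apache_beam as beam

    with beam.Pipeline() as pipeline:
        (
            pipeline
            | "CreateLines" >> beam.Create(["alice,30,nyc", "bo,12,sf"])
            | "Extract" >> beam.FlatMap(extract_name_and_age)
            | "Filter" >> beam.FlatMap(keep_adults)
            | "Format" >> beam.FlatMap(format_pair)
            | "Print" >> beam.Map(print)
        )
```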

When you apply a ParDo transform, you’ll need to provide user code in the form

of a DoFn object. DoFn is a Beam SDK class that defines a distributed

processing function.

In Beam YAML, ParDo operations are expressed by the MapToFields, Filter,

and Explode transform types. These types can take a UDF in the language of your

choice, rather than introducing the notion of a DoFn.

See the page on mapping fns for more details.

When you create a subclass of

DoFn, note that your subclass should adhere to the Requirements for writing user code for Beam transforms.

All DoFns should be registered using a generic register.DoFnXxY[...]

function. This allows the Go SDK to infer an encoding from any inputs/outputs,

registers the DoFn for execution on remote runners, and optimizes the runtime

execution of the DoFns via reflection.

// ComputeWordLengthFn is a DoFn that computes the word length of string elements.
type ComputeWordLengthFn struct{}

// ProcessElement computes the length of word and emits the result.
// When creating structs as a DoFn, the ProcessElement method performs the
// work of this step in the pipeline.
func (fn *ComputeWordLengthFn) ProcessElement(ctx context.Context, word string) int {
    ...
}

func init() {
    // 2 inputs and 1 output => DoFn2x1
    // Input/output types are included in order in the brackets
    register.DoFn2x1[context.Context, string, int](&ComputeWordLengthFn{})
}

4.2.1.1. Applying ParDo

Like all Beam transforms, you apply ParDo by calling the apply method on the

input PCollection and passing ParDo as an argument, as shown in the

following example code:

Like all Beam transforms, you apply ParDo by calling beam.ParDo with the input PCollection and passing the DoFn as an argument, as shown in the following example code:

beam.ParDo applies the passed in DoFn argument to the input PCollection,

as shown in the following example code:

// The input PCollection of Strings.
PCollection<String> words = ...;

// The DoFn to perform on each element in the input PCollection.
static class ComputeWordLengthFn extends DoFn<String, Integer> { ... }

// Apply a ParDo to the PCollection "words" to compute lengths for each word.
PCollection<Integer> wordLengths = words.apply(
    ParDo
    .of(new ComputeWordLengthFn()));  // The DoFn to perform on each element, which
                                      // we define above.

# The input PCollection of Strings.
words = ...

# The DoFn to perform on each element in the input PCollection.
class ComputeWordLengthFn(beam.DoFn):
  def process(self, element):
    return [len(element)]

# Apply a ParDo to the PCollection "words" to compute lengths for each word.
word_lengths = words | beam.ParDo(ComputeWordLengthFn())

// ComputeWordLengthFn is the DoFn to perform on each element in the input PCollection.
type ComputeWordLengthFn struct{}

// ProcessElement is the method to execute for each element.
func (fn *ComputeWordLengthFn) ProcessElement(word string, emit func(int)) {
    emit(len(word))
}

// DoFns must be registered with beam.
func init() {
    beam.RegisterType(reflect.TypeOf((*ComputeWordLengthFn)(nil)))
    // 2 inputs and 0 outputs => DoFn2x0
    // 1 input => Emitter1
    // Input/output types are included in order in the brackets
    register.DoFn2x0[string, func(int)](&ComputeWordLengthFn{})
    register.Emitter1[int]()
}

// words is an input PCollection of strings
var words beam.PCollection = ...

wordLengths := beam.ParDo(s, &ComputeWordLengthFn{}, words)

// The input PCollection of Strings.
const words : PCollection<string> = ...

// The DoFn to perform on each element in the input PCollection.
function computeWordLengthFn(): beam.DoFn<string, number> {
  return {
    process: function* (element) {
      yield element.length;
    },
  };
}

const result = words.apply(beam.parDo(computeWordLengthFn()));

In the example, our input PCollection contains String (or string) values. We apply a ParDo transform that specifies a function (ComputeWordLengthFn) to compute the length of each string, and outputs the result to a new PCollection of Integer (or int) values that stores the length of each word.

4.2.1.2. Creating a DoFn

The DoFn object that you pass to ParDo contains the processing logic that

gets applied to the elements in the input collection. When you use Beam, often

the most important pieces of code you’ll write are these DoFns - they’re what

define your pipeline’s exact data processing tasks.

Note: When you create your DoFn, be mindful of the Requirements for writing user code for Beam transforms and ensure that your code follows them. You should avoid time-consuming operations such as reading large files in DoFn.Setup.

A DoFn processes one element at a time from the input PCollection. When you

create a subclass of DoFn, you’ll need to provide type parameters that match

the types of the input and output elements. If your DoFn processes incoming

String elements and produces Integer elements for the output collection

(like our previous example, ComputeWordLengthFn), your class declaration would look like this:

static class ComputeWordLengthFn extends DoFn<String, Integer> { ... }

A DoFn processes one element at a time from the input PCollection. When you

create a DoFn struct, you’ll need to provide type parameters that match

the types of the input and output elements in a ProcessElement method.

If your DoFn processes incoming string elements and produces int elements

for the output collection (like our previous example, ComputeWordLengthFn), your DoFn could look like this:

// ComputeWordLengthFn is a DoFn that computes the word length of string elements.
type ComputeWordLengthFn struct{}

// ProcessElement computes the length of word and emits the result.
// When creating structs as a DoFn, the ProcessElement method performs the
// work of this step in the pipeline.
func (fn *ComputeWordLengthFn) ProcessElement(word string, emit func(int)) {
    ...
}

func init() {
    // 2 inputs and 0 outputs => DoFn2x0
    // 1 input => Emitter1
    // Input/output types are included in order in the brackets
    register.DoFn2x0[string, func(int)](&ComputeWordLengthFn{})
    register.Emitter1[int]()
}

Inside your DoFn subclass, you’ll write a method annotated with

@ProcessElement where you provide the actual processing logic. You don’t need

to manually extract the elements from the input collection; the Beam SDKs handle

that for you. Your @ProcessElement method should accept a parameter tagged with

@Element, which will be populated with the input element. In order to output

elements, the method can also take a parameter of type OutputReceiver which

provides a method for emitting elements. The parameter types must match the input

and output types of your DoFn or the framework will raise an error. Note: @Element and

OutputReceiver were introduced in Beam 2.5.0; if using an earlier release of Beam, a

ProcessContext parameter should be used instead.

Inside your DoFn subclass, you’ll write a method process where you provide

the actual processing logic. You don’t need to manually extract the elements

from the input collection; the Beam SDKs handle that for you. Your process method

should accept an argument element, which is the input element, and return an

iterable with its output values. You can accomplish this by emitting individual

elements with yield statements, and use yield from to emit all elements from

an iterable, such as a list or a generator. Using a return statement

with an iterable is also acceptable as long as you don’t mix yield and

return statements in the same process method, since that leads to incorrect behavior.
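The reason mixing the two is unsafe can be seen without Beam at all. The following is a minimal plain-Python sketch (the function names are illustrative and are not part of the Beam API). It shows that once a function body contains yield, Python treats the whole function as a generator, and a value passed to return is no longer delivered to a caller that simply iterates over the outputs.

```python
def process_with_yield(element):
    # A generator: each yielded value becomes one output element.
    for word in element.split():
        yield word


def process_with_return(element):
    # A regular function: the returned list is the iterable of outputs.
    return element.split()


def process_mixed(element):
    # The presence of `yield` makes this a generator function, so the
    # list given to `return` is attached to StopIteration and is not
    # seen by a caller that just iterates over the outputs.
    if " " not in element:
        yield element
    else:
        return element.split()


yield_outputs = list(process_with_yield("try it now"))
return_outputs = list(process_with_return("try it now"))
mixed_single = list(process_mixed("beam"))
mixed_multi = list(process_mixed("try it now"))  # the returned words are lost
```

In this sketch, the first two functions produce the same three words, while process_mixed silently produces no output for the multi-word input.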

For your DoFn type, you’ll write a method ProcessElement where you provide

the actual processing logic. You don’t need to manually extract the elements

from the input collection; the Beam SDKs handle that for you. Your ProcessElement method

should accept a parameter element, which is the input element. In order to output elements,

the method can also take a function parameter, which can be called to emit elements.

The parameter types must match the input and output types of your DoFn

or the framework will raise an error.

// ComputeWordLengthFn is the DoFn to perform on each element in the input PCollection.

type ComputeWordLengthFn struct{}

// ProcessElement is the method to execute for each element.

func (fn *ComputeWordLengthFn) ProcessElement(word string, emit func(int)) {

emit(len(word))

}

// DoFns must be registered with beam.

func init() {

beam.RegisterType(reflect.TypeOf((*ComputeWordLengthFn)(nil)))

// 2 inputs and 0 outputs => DoFn2x0

// 1 input => Emitter1

// Input/output types are included in order in the brackets

register.DoFn2x0[string, func(int)](&ComputeWordLengthFn{})

register.Emitter1[int]()

}

Simple DoFns can also be written as functions.

Note: Whether using a structural DoFn type or a functional DoFn, they should be registered with beam in an init block. Otherwise they may not execute on distributed runners.

Note: If the elements in your input PCollection are key/value pairs, you can access the key or value by using element.getKey() or element.getValue(), respectively.

Note: If the elements in your input PCollection are key/value pairs, your process element method must have two parameters, one each for the key and the value. Similarly, key/value pairs are also output as separate parameters to a single emitter function.

Proper use of return vs yield in Python Functions.

Returning a single element (e.g., return element) is incorrect. The process method in Beam must return an iterable of elements. Returning a single value like an integer or string (e.g., return element) leads to a runtime error (TypeError: 'int' object is not iterable) or incorrect results, since the return value will be treated as an iterable. Always ensure your return type is iterable.

import apache_beam as beam

# Incorrectly returning a single string instead of a sequence
class ReturnIndividualElement(beam.DoFn):
    def process(self, element):
        # The string itself is iterated, so each character becomes an output.
        return element

with beam.Pipeline() as pipeline:
    (
        pipeline
        | "CreateExamples" >> beam.Create(["foo"])
        | "MapIncorrect" >> beam.ParDo(ReturnIndividualElement())
        | "Print" >> beam.Map(print)
    )

# prints:
# f
# o
# o

Returning a list (e.g., return [element1, element2]) is valid because a list is iterable. This approach works well when emitting multiple outputs from a single call and is easy to read for small datasets.

import apache_beam as beam

# Returning a list of strings
class ReturnWordsFn(beam.DoFn):
    def process(self, element):
        # Split the sentence and return all words longer than 2 characters as a list
        return [word for word in element.split() if len(word) > 2]

with beam.Pipeline() as pipeline:
    (
        pipeline
        | "CreateSentences_Return" >> beam.Create([  # Create a collection of sentences
            "Apache Beam is powerful",  # Sentence 1
            "Try it now"  # Sentence 2
        ])
        | "SplitWithReturn" >> beam.ParDo(ReturnWordsFn())  # Apply the custom DoFn to split words
        | "PrintWords_Return" >> beam.Map(print)  # Print each word
    )

# prints:
# Apache
# Beam
# powerful
# Try
# now

Using yield (e.g., yield element) is also valid. This approach can be useful for generating multiple outputs more flexibly, especially in cases where conditional logic or loops are involved.

import apache_beam as beam

# Yielding each word one at a time
class YieldWordsFn(beam.DoFn):
    def process(self, element):
        # Split the sentence and yield words that have more than 2 characters
        for word in element.split():
            if len(word) > 2:
                yield word

with beam.Pipeline() as pipeline:
    (
        pipeline
        | "CreateSentences_Yield" >> beam.Create([  # Create a collection of sentences
            "Apache Beam is powerful",  # Sentence 1
            "Try it now"  # Sentence 2
        ])
        | "SplitWithYield" >> beam.ParDo(YieldWordsFn())  # Apply the custom DoFn to split words
        | "PrintWords_Yield" >> beam.Map(print)  # Print each word
    )

# prints:
# Apache
# Beam
# powerful
# Try
# now

A given DoFn instance generally gets invoked one or more times to process some

arbitrary bundle of elements. However, Beam doesn’t guarantee an exact number of

invocations; it may be invoked multiple times on a given worker node to account

for failures and retries. As such, you can cache information across multiple

calls to your processing method, but if you do so, make sure the implementation

does not depend on the number of invocations.
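As a rough illustration of this guidance, here is a plain-Python sketch that does not use the Beam API. The class LookupFn, its cache, and its invocation counter are hypothetical names introduced for the example. The cache speeds up repeated calls, but the output for a given element is the same no matter how many times the method has already run, so a retried bundle yields identical results.

```python
class LookupFn:
    """Hypothetical stand-in for a DoFn that caches per-word results."""

    def __init__(self):
        self._cache = {}
        # Kept only to show that invocations may be repeated; the outputs
        # below never depend on this counter.
        self.invocations = 0

    def process(self, word):
        self.invocations += 1
        if word not in self._cache:
            self._cache[word] = len(word)
        yield self._cache[word]


fn = LookupFn()
bundle = ["cat", "tree", "cat"]

# Simulate the same bundle being processed twice, as after a retry.
first_attempt = [out for word in bundle for out in fn.process(word)]
retry = [out for word in bundle for out in fn.process(word)]
```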

In your processing method, you’ll also need to meet some immutability requirements to ensure that Beam and the processing back-end can safely serialize and cache the values in your pipeline. Your method should meet the following requirements:

- You should not in any way modify an element returned by the @Element annotation or ProcessContext.sideInput() (the incoming elements from the input collection).

- Once you output a value using OutputReceiver.output() you should not modify that value in any way.

- You should not in any way modify the element argument provided to the process method, or any side inputs.

- Once you output a value using yield or return, you should not modify that value in any way.

- You should not in any way modify the parameters provided to the ProcessElement method, or any side inputs.

- Once you output a value using an emitter function, you should not modify that value in any way.
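One way to respect these requirements is to build a new value instead of changing the one you were given. The following plain-Python sketch (the function names are illustrative, and no Beam API is used) contrasts an in-place modification with a copy-then-modify approach.

```python
import copy


def add_length_in_place(element):
    # Anti-pattern: mutates the dictionary that was handed to the function.
    element["length"] = len(element["word"])
    yield element


def add_length_copy(element):
    # Preferred: copy first, then modify and emit the copy.
    # A shallow copy is enough here because the dictionary is flat.
    out = copy.copy(element)
    out["length"] = len(out["word"])
    yield out


mutated_input = {"word": "beam"}
list(add_length_in_place(mutated_input))  # mutated_input now has a new field

original = {"word": "beam"}
outputs = list(add_length_copy(original))  # original is left untouched
```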

4.2.1.3. Lightweight DoFns and other abstractions

If your function is relatively straightforward, you can simplify your use of ParDo by providing a lightweight DoFn in-line, as an anonymous inner class instance (Java), a lambda function (Python), an anonymous function (Go), or a function passed to PCollection.map or PCollection.flatMap (TypeScript).

Here’s the previous example, ParDo with ComputeWordLengthFn, with the DoFn specified as an anonymous inner class instance (Java), a lambda function (Python), an anonymous function (Go), or a function (TypeScript):

// The input PCollection.

PCollection<String> words = ...;

// Apply a ParDo with an anonymous DoFn to the PCollection words.

// Save the result as the PCollection wordLengths.

PCollection<Integer> wordLengths = words.apply(

"ComputeWordLengths", // the transform name

ParDo.of(new DoFn<String, Integer>() { // a DoFn as an anonymous inner class instance

@ProcessElement

public void processElement(@Element String word, OutputReceiver<Integer> out) {

out.output(word.length());

}

}));

If your ParDo performs a one-to-one mapping of input elements to output elements (that is, for each input element, it applies a function that produces exactly one output element), you can use the higher-level MapElements (Java) or Map (Python) transform, or, in the Go SDK, return that element directly. MapElements can accept an anonymous Java 8 lambda function for additional brevity.

Here’s the previous example using MapElements (Java), Map (Python), or a direct return (Go):

// The input PCollection.

PCollection<String> words = ...;

// Apply a MapElements with an anonymous lambda function to the PCollection words.

// Save the result as the PCollection wordLengths.

PCollection<Integer> wordLengths = words.apply(

MapElements.into(TypeDescriptors.integers())

.via((String word) -> word.length()));

The Go SDK cannot support anonymous functions outside of the deprecated Go Direct runner.

func wordLengths(word string) int { return len(word) }

func init() { register.Function1x1(wordLengths) }

func applyWordLenAnon(s beam.Scope, words beam.PCollection) beam.PCollection {

return beam.ParDo(s, wordLengths, words)

}

Note: You can use Java 8 lambda functions with several other Beam transforms, including Filter, FlatMapElements, and Partition.

Note: Anonymous function DoFns do not work on distributed runners. It’s recommended to use named functions and register them with register.FunctionXxY in an init() block.

4.2.1.4. DoFn lifecycle

Here is a sequence diagram that shows the lifecycle of the DoFn during the execution of the ParDo transform. The comments give useful information to pipeline developers such as the constraints that apply to the objects or particular cases such as failover or instance reuse. They also give instantiation use cases. Three key points to note are that:

- Teardown is done on a best effort basis and thus isn’t guaranteed.

- The number of DoFn instances created at runtime is runner-dependent.

- For the Python SDK, the pipeline contents, such as DoFn user code, are serialized into bytecode. Therefore, DoFns should not reference objects that are not serializable, such as locks. To manage a single instance of an object across multiple DoFn instances in the same process, use utilities in the shared.py module.
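Since the diagram itself is not reproduced here, the call order it describes can be approximated with a plain-Python simulation. This is only a sketch: RecordingFn and run_bundles are hypothetical names, the method names mirror those of the Python SDK's DoFn (setup, start_bundle, process, finish_bundle, teardown), and a real runner decides on its own how many instances to create and whether teardown runs at all.

```python
class RecordingFn:
    """Stand-in for a DoFn that records the order of lifecycle calls."""

    def __init__(self):
        self.calls = []

    def setup(self):
        self.calls.append("setup")

    def start_bundle(self):
        self.calls.append("start_bundle")

    def process(self, element):
        self.calls.append(f"process({element})")
        yield len(element)

    def finish_bundle(self):
        self.calls.append("finish_bundle")

    def teardown(self):
        self.calls.append("teardown")


def run_bundles(fn, bundles):
    """Hypothetical driver that reuses one instance across several bundles."""
    outputs = []
    fn.setup()
    for bundle in bundles:
        fn.start_bundle()
        for element in bundle:
            outputs.extend(fn.process(element))
        fn.finish_bundle()
    fn.teardown()  # best effort in a real runner; it may never be called
    return outputs


fn = RecordingFn()
lengths = run_bundles(fn, [["cat", "tree"], ["jump"]])
```

In this simulation setup and teardown each run once per instance, while start_bundle and finish_bundle wrap every bundle of elements.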

4.2.2. GroupByKey

GroupByKey is a Beam transform for processing collections of key/value pairs.

It’s a parallel reduction operation, analogous to the Shuffle phase of a

Map/Shuffle/Reduce-style algorithm. The input to GroupByKey is a collection of

key/value pairs that represents a multimap, where the collection contains

multiple pairs that have the same key, but different values. Given such a

collection, you use GroupByKey to collect all of the values associated with

each unique key.

GroupByKey is a good way to aggregate data that has something in common. For

example, if you have a collection that stores records of customer orders, you

might want to group together all the orders from the same postal code (wherein

the “key” of the key/value pair is the postal code field, and the “value” is the

remainder of the record).

Let’s examine the mechanics of GroupByKey with a simple example case, where

our data set consists of words from a text file and the line number on which

they appear. We want to group together all the line numbers (values) that share

the same word (key), letting us see all the places in the text where a

particular word appears.

Our input is a PCollection of key/value pairs where each word is a key, and

the value is a line number in the file where the word appears. Here’s a list of

the key/value pairs in the input collection:

cat, 1

dog, 5

and, 1

jump, 3

tree, 2

cat, 5

dog, 2

and, 2

cat, 9

and, 6

...

GroupByKey gathers up all the values with the same key and outputs a new pair

consisting of the unique key and a collection of all of the values that were

associated with that key in the input collection. If we apply GroupByKey to

our input collection above, the output collection would look like this:

cat, [1,5,9]

dog, [5,2]

and, [1,2,6]

jump, [3]

tree, [2]

...

Thus, GroupByKey represents a transform from a multimap (multiple keys to

individual values) to a uni-map (unique keys to collections of values).
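The multimap-to-uni-map behaviour can be sketched in plain Python with the sample data above. This is not the Beam API: group_by_key is a hypothetical helper that runs in a single process, whereas a real GroupByKey is distributed and does not guarantee the order of the grouped values.

```python
from collections import defaultdict

# The key/value pairs from the example: (word, line number).
pairs = [
    ("cat", 1), ("dog", 5), ("and", 1), ("jump", 3), ("tree", 2),
    ("cat", 5), ("dog", 2), ("and", 2), ("cat", 9), ("and", 6),
]


def group_by_key(pairs):
    """Collect every value that shares a key into one list per key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


grouped = group_by_key(pairs)
```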

Using GroupByKey is straightforward:

While all SDKs have a GroupByKey transform, using GroupBy is

generally more natural.

The GroupBy transform can be parameterized by the name(s) of properties

on which to group the elements of the PCollection, or a function taking

each element as input that maps to a key on which to do grouping.

// A PCollection of elements like

// {word: "cat", score: 1}, {word: "dog", score: 5}, {word: "cat", score: 5}, ...

const scores : PCollection<{word: string, score: number}> = ...

// This will produce a PCollection with elements like

// {key: "cat", value: [{ word: "cat", score: 1 },

// { word: "cat", score: 5 }, ...]}

// {key: "dog", value: [{ word: "dog", score: 5 }, ...]}

const grouped_by_word = scores.apply(beam.groupBy("word"));

// This will produce a PCollection with elements like

// {key: 3, value: [{ word: "cat", score: 1 },

// { word: "dog", score: 5 },

// { word: "cat", score: 5 }, ...]}

const by_word_length = scores.apply(beam.groupBy((x) => x.word.length));

4.2.2.1. GroupByKey and unbounded PCollections

If you are using unbounded PCollections, you must use either non-global

windowing or an

aggregation trigger in order to perform a GroupByKey or

CoGroupByKey. This is because a bounded GroupByKey or

CoGroupByKey must wait for all the data with a certain key to be collected,

but with unbounded collections, the data is unlimited. Windowing and/or triggers

allow grouping to operate on logical, finite bundles of data within the

unbounded data streams.
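To make the idea of finite bundles concrete, here is a plain-Python sketch of grouping by key within fixed windows. It assumes each event carries an integer timestamp in seconds and uses a hypothetical 60-second window size. It ignores triggers, watermarks, and late data, all of which a real runner has to handle.

```python
from collections import defaultdict


def window_start(timestamp, size):
    """Return the start of the fixed window that contains the timestamp."""
    return timestamp - (timestamp % size)


def group_by_key_and_window(events, size):
    """Group values by (key, window start) so every group is finite."""
    grouped = defaultdict(list)
    for key, value, timestamp in events:
        grouped[(key, window_start(timestamp, size))].append(value)
    return dict(grouped)


# (key, value, timestamp in seconds); in a stream, more events keep arriving.
events = [
    ("cat", 1, 5),
    ("cat", 5, 42),
    ("dog", 5, 59),
    ("cat", 9, 61),
    ("dog", 2, 130),
]

windowed = group_by_key_and_window(events, 60)
```

Each (key, window) group can be emitted once its window is considered complete, even though the stream as a whole never ends.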

If you do apply GroupByKey or CoGroupByKey to a group of unbounded

PCollections without setting either a non-global windowing strategy, a trigger

strategy, or both for each collection, Beam generates an IllegalStateException

error at pipeline construction time.

When using GroupByKey or CoGroupByKey to group PCollections that have a

windowing strategy applied, all of the PCollections you want to

group must use the same windowing strategy and window sizing. For example, all

of the collections you are merging must use (hypothetically) identical 5-minute

fixed windows, or 4-minute sliding windows starting every 30 seconds.

If your pipeline attempts to use GroupByKey or CoGroupByKey to merge

PCollections with incompatible windows, Beam generates an

IllegalStateException error at pipeline construction time.

4.2.3. CoGroupByKey

CoGroupByKey performs a relational join of two or more key/value

PCollections that have the same key type.

Design Your Pipeline

shows an example pipeline that uses a join.

Consider using CoGroupByKey if you have multiple data sets that provide

information about related things. For example, let’s say you have two different

files with user data: one file has names and email addresses; the other file

has names and phone numbers. You can join those two data sets, using the user

name as a common key and the other data as the associated values. After the

join, you have one data set that contains all of the information (email

addresses and phone numbers) associated with each name.

One can also consider using SqlTransform to perform a join.

If you are using unbounded PCollections, you must use either non-global

windowing or an

aggregation trigger in order to perform a CoGroupByKey. See

GroupByKey and unbounded PCollections

for more details.

In the Beam SDK for Java, CoGroupByKey accepts a tuple of keyed

PCollections (PCollection<KV<K, V>>) as input. For type safety, the SDK

requires you to pass each PCollection as part of a KeyedPCollectionTuple.

You must declare a TupleTag for each input PCollection in the

KeyedPCollectionTuple that you want to pass to CoGroupByKey. As output,

CoGroupByKey returns a PCollection<KV<K, CoGbkResult>>, which groups values

from all the input PCollections by their common keys. Each key (all of type

K) will have a different CoGbkResult, which is a map from TupleTag<T> to

Iterable<T>. You can access a specific collection in a CoGbkResult object

by using the TupleTag that you supplied with the initial collection.

In the Beam SDK for Python, CoGroupByKey accepts a dictionary of keyed

PCollections as input. As output, CoGroupByKey creates a single output

PCollection that contains one key/value tuple for each key in the input

PCollections. Each key’s value is a dictionary that maps each tag to an

iterable of the values under the key in the corresponding PCollection.
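The shape of that output can be sketched in plain Python. The helper co_group_by_key below is hypothetical and runs in a single process; it only mimics the tag-to-values dictionary described above and is not the Beam implementation.

```python
from collections import defaultdict


def co_group_by_key(tagged_inputs):
    """tagged_inputs maps a tag to a list of (key, value) pairs.

    Returns a dict from key to {tag: [values]}, with an empty list for
    any tag that has no value under that key.
    """
    result = defaultdict(lambda: {tag: [] for tag in tagged_inputs})
    for tag, pairs in tagged_inputs.items():
        for key, value in pairs:
            result[key][tag].append(value)
    return dict(result)


emails = [
    ("amy", "amy@example.com"),
    ("carl", "carl@example.com"),
    ("julia", "julia@example.com"),
    ("carl", "carl@email.com"),
]
phones = [
    ("amy", "111-222-3333"),
    ("james", "222-333-4444"),
    ("amy", "333-444-5555"),
    ("carl", "444-555-6666"),
]

joined = co_group_by_key({"emails": emails, "phones": phones})
```

Keys that appear in only one input, such as james and julia here, still show up in the result with an empty list under the other tag.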

In the Beam Go SDK, CoGroupByKey accepts an arbitrary number of

PCollections as input. As output, CoGroupByKey creates a single output

PCollection that groups each key with value iterator functions for each

input PCollection. The iterator functions map to input PCollections in

the same order they were provided to the CoGroupByKey.

The following conceptual examples use two input collections to show the mechanics of

CoGroupByKey.

The first set of data has a TupleTag<String> called emailsTag and contains names

and email addresses. The second set of data has a TupleTag<String> called

phonesTag and contains names and phone numbers.

The first set of data contains names and email addresses. The second set of data contains names and phone numbers.

final List<KV<String, String>> emailsList =

Arrays.asList(

KV.of("amy", "amy@example.com"),

KV.of("carl", "carl@example.com"),

KV.of("julia", "julia@example.com"),

KV.of("carl", "carl@email.com"));

final List<KV<String, String>> phonesList =

Arrays.asList(

KV.of("amy", "111-222-3333"),

KV.of("james", "222-333-4444"),

KV.of("amy", "333-444-5555"),

KV.of("carl", "444-555-6666"));

PCollection<KV<String, String>> emails = p.apply("CreateEmails", Create.of(emailsList));

PCollection<KV<String, String>> phones = p.apply("CreatePhones", Create.of(phonesList));

emails_list = [
    ('amy', 'amy@example.com'),
    ('carl', 'carl@example.com'),
    ('julia', 'julia@example.com'),
    ('carl', 'carl@email.com'),
]
phones_list = [
    ('amy', '111-222-3333'),
    ('james', '222-333-4444'),
    ('amy', '333-444-5555'),
    ('carl', '444-555-6666'),
]

emails = p | 'CreateEmails' >> beam.Create(emails_list)
phones = p | 'CreatePhones' >> beam.Create(phones_list)

type stringPair struct {

K, V string

}

func splitStringPair(e stringPair) (string, string) {

return e.K, e.V

}

func init() {

// Register DoFn.

register.Function1x2(splitStringPair)

}

// CreateAndSplit is a helper function that creates a PCollection from the

// input slice and splits each stringPair into a key/value pair.

func CreateAndSplit(s beam.Scope, input []stringPair) beam.PCollection {

initial := beam.CreateList(s, input)

return beam.ParDo(s, splitStringPair, initial)

}

var emailSlice = []stringPair{

{"amy", "amy@example.com"},

{"carl", "carl@example.com"},

{"julia", "julia@example.com"},

{"carl", "carl@email.com"},

}

var phoneSlice = []stringPair{

{"amy", "111-222-3333"},

{"james", "222-333-4444"},

{"amy", "333-444-5555"},

{"carl", "444-555-6666"},

}

emails := CreateAndSplit(s.Scope("CreateEmails"), emailSlice)

phones := CreateAndSplit(s.Scope("CreatePhones"), phoneSlice)

const emails_list = [

{ name: "amy", email: "amy@example.com" },

{ name: "carl", email: "carl@example.com" },

{ name: "julia", email: "julia@example.com" },

{ name: "carl", email: "carl@email.com" },

];

const phones_list = [

{ name: "amy", phone: "111-222-3333" },

{ name: "james", phone: "222-333-4444" },

{ name: "amy", phone: "333-444-5555" },

{ name: "carl", phone: "444-555-6666" },

];

const emails = root.apply(

beam.withName("createEmails", beam.create(emails_list))

);

const phones = root.apply(

beam.withName("createPhones", beam.create(phones_list))

);

- type: Create
  name: CreateEmails
  config:
    elements:
      - { name: "amy", email: "amy@example.com" }
      - { name: "carl", email: "carl@example.com" }
      - { name: "julia", email: "julia@example.com" }
      - { name: "carl", email: "carl@email.com" }

- type: Create
  name: CreatePhones
  config:
    elements:
      - { name: "amy", phone: "111-222-3333" }
      - { name: "james", phone: "222-333-4444" }
      - { name: "amy", phone: "333-444-5555" }
      - { name: "carl", phone: "444-555-6666" }

After CoGroupByKey, the resulting data contains all data associated with each

unique key from any of the input collections.

final TupleTag<String> emailsTag = new TupleTag<>();

final TupleTag<String> phonesTag = new TupleTag<>();

final List<KV<String, CoGbkResult>> expectedResults =

Arrays.asList(

KV.of(

"amy",

CoGbkResult.of(emailsTag, Arrays.asList("amy@example.com"))

.and(phonesTag, Arrays.asList("111-222-3333", "333-444-5555"))),

KV.of(

"carl",

CoGbkResult.of(emailsTag, Arrays.asList("carl@email.com", "carl@example.com"))

.and(phonesTag, Arrays.asList("444-555-6666"))),

KV.of(

"james",

CoGbkResult.of(emailsTag, Arrays.asList())

.and(phonesTag, Arrays.asList("222-333-4444"))),

KV.of(

"julia",

CoGbkResult.of(emailsTag, Arrays.asList("julia@example.com"))

.and(phonesTag, Arrays.asList())));

results = [
    (
        'amy',
        {
            'emails': ['amy@example.com'],
            'phones': ['111-222-3333', '333-444-5555']
        }),
    (
        'carl',
        {
            'emails': ['carl@email.com', 'carl@example.com'],
            'phones': ['444-555-6666']
        }),
    ('james', {
        'emails': [], 'phones': ['222-333-4444']
    }),
    ('julia', {
        'emails': ['julia@example.com'], 'phones': []
    }),
]

results := beam.CoGroupByKey(s, emails, phones)

contactLines := beam.ParDo(s, formatCoGBKResults, results)

// Synthetic example results of a cogbk.

results := []struct {

Key string

Emails, Phones []string

}{

{

Key: "amy",

Emails: []string{"amy@example.com"},

Phones: []string{"111-222-3333", "333-444-5555"},

}, {

Key: "carl",

Emails: []string{"carl@email.com", "carl@example.com"},

Phones: []string{"444-555-6666"},

}, {

Key: "james",

Emails: []string{},

Phones: []string{"222-333-4444"},

}, {

Key: "julia",

Emails: []string{"julia@example.com"},

Phones: []string{},

},

}

const results = [

{

name: "amy",

values: {

emails: [{ name: "amy", email: "amy@example.com" }],

phones: [

{ name: "amy", phone: "111-222-3333" },

{ name: "amy", phone: "333-444-5555" },

],

},

},

{

name: "carl",

values: {

emails: [

{ name: "carl", email: "carl@example.com" },

{ name: "carl", email: "carl@email.com" },

],

phones: [{ name: "carl", phone: "444-555-6666" }],

},

},

{

name: "james",

values: {

emails: [],

phones: [{ name: "james", phone: "222-333-4444" }],

},

},

{

name: "julia",

values: {

emails: [{ name: "julia", email: "julia@example.com" }],

phones: [],

},

},

];

The following code example joins the two PCollections with CoGroupByKey,

followed by a ParDo to consume the result. Then, the code uses tags to look up

and format data from each collection.

The following code example joins the two PCollections with CoGroupByKey,

followed by a ParDo to consume the result. The ordering of the DoFn iterator

parameters maps to the ordering of the CoGroupByKey inputs.

PCollection<KV<String, CoGbkResult>> results =

KeyedPCollectionTuple.of(emailsTag, emails)

.and(phonesTag, phones)

.apply(CoGroupByKey.create());

PCollection<String> contactLines =

results.apply(

ParDo.of(

new DoFn<KV<String, CoGbkResult>, String>() {

@ProcessElement

public void processElement(ProcessContext c) {

KV<String, CoGbkResult> e = c.element();

String name = e.getKey();

Iterable<String> emailsIter = e.getValue().getAll(emailsTag);

Iterable<String> phonesIter = e.getValue().getAll(phonesTag);

String formattedResult =

Snippets.formatCoGbkResults(name, emailsIter, phonesIter);

c.output(formattedResult);

}

}));

# The result PCollection contains one key-value element for each key in the
# input PCollections. The key of the pair will be the key from the input and
# the value will be a dictionary with two entries: 'emails' - an iterable of
# all values for the current key in the emails PCollection and 'phones': an
# iterable of all values for the current key in the phones PCollection.
results = ({'emails': emails, 'phones': phones} | beam.CoGroupByKey())

def join_info(name_info):
    (name, info) = name_info
    return '%s; %s; %s' %\
        (name, sorted(info['emails']), sorted(info['phones']))

contact_lines = results | beam.Map(join_info)

func formatCoGBKResults(key string, emailIter, phoneIter func(*string) bool) string {

var s string

var emails, phones []string

for emailIter(&s) {

emails = append(emails, s)

}

for phoneIter(&s) {

phones = append(phones, s)

}

// Values have no guaranteed order, sort for deterministic output.

sort.Strings(emails)

sort.Strings(phones)

return fmt.Sprintf("%s; %s; %s", key, formatStringIter(emails), formatStringIter(phones))

}

func init() {

register.Function3x1(formatCoGBKResults)

// 1 input of type string => Iter1[string]

register.Iter1[string]()

}

- type: MapToFields

  name: PrepareEmails
  input: CreateEmails
  config:
    language: python
    fields:
      name: name
      email: "[email]"
      phone: "[]"

- type: MapToFields
  name: PreparePhones
  input: CreatePhones
  config:
    language: python
    fields:
      name: name
      email: "[]"
      phone: "[phone]"

- type: Combine
  name: CoGroupBy
  input: [PrepareEmails, PreparePhones]
  config:
    group_by: [name]
    combine:
      email: concat
      phone: concat

- type: MapToFields
  name: FormatResults
  input: CoGroupBy
  config:
    language: python
    fields:
      formatted:
        "'%s; %s; %s' % (name, sorted(email), sorted(phone))"

The formatted data looks like this:

4.2.4. Combine

Combine

is a Beam transform for combining collections of elements or values in your

data. Combine has variants that work on entire PCollections, and some that

combine the values for each key in PCollections of key/value pairs.

When you apply a Combine transform, you must provide the function that

contains the logic for combining the elements or values. The combining function

should be commutative and associative, as the function is not necessarily

invoked exactly once on all values with a given key. Because the input data

(including the value collection) may be distributed across multiple workers, the

combining function might be called multiple times to perform partial combining

on subsets of the value collection. The Beam SDK also provides some pre-built

combine functions for common numeric combination operations such as sum, min,

and max.
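A small plain-Python experiment shows why these properties matter. The helper combine_in_parts is hypothetical and simply imitates partial combining by applying a function to slices of the data and then to the partial results. Summation gives the same answer either way, while a naive mean of means does not, which is why a mean needs a separate accumulator type.

```python
def combine_in_parts(values, combine, num_parts):
    """Combine each slice separately, then combine the partial results."""
    size = max(1, -(-len(values) // num_parts))  # ceiling division
    partials = [
        combine(values[i:i + size]) for i in range(0, len(values), size)
    ]
    return combine(partials)


def mean(values):
    return sum(values) / len(values)


values = [1, 2, 3, 4, 100]

# Sum is associative and commutative, so partial combining is safe.
sum_whole = sum(values)
sum_parts = combine_in_parts(values, sum, 2)

# A mean of partial means weights the slices incorrectly.
mean_whole = mean(values)
mean_parts = combine_in_parts(values, mean, 2)
```

Here the slices are [1, 2, 3] and [4, 100]: the partial sums 6 and 104 add up to the correct total, but the partial means 2.0 and 52.0 average to 27.0 rather than the true mean of 22.0.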

Simple combine operations, such as sums, can usually be implemented as a simple

function. More complex combination operations might require you to create a

subclass of CombineFn

that has an accumulation type distinct from the input/output type.

The associativity and commutativity of a CombineFn allows runners to

automatically apply some optimizations:

- Combiner lifting: This is the most significant optimization. Input elements are combined per key and window before they are shuffled, so the volume of data shuffled might be reduced by many orders of magnitude. Another term for this optimization is “mapper-side combine.”

- Incremental combining: When you have a CombineFn that reduces the data size by a lot, it is useful to combine elements as they emerge from a streaming shuffle. This spreads out the cost of doing combines over the time that your streaming computation might be idle. Incremental combining also reduces the storage of intermediate accumulators.
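The effect of combiner lifting can be approximated in plain Python. In this sketch, which does not use Beam, pre_combine and shuffle_and_sum are hypothetical helpers and the two lists stand in for bundles held by two workers. Pre-combining per key on each worker reduces the number of records that reach the shuffle without changing the final totals.

```python
from collections import defaultdict


def pre_combine(bundle):
    """Sum values per key within one worker's bundle, before the shuffle."""
    partial = defaultdict(int)
    for key, value in bundle:
        partial[key] += value
    return list(partial.items())


def shuffle_and_sum(records):
    """Bring all records for a key together and sum them."""
    totals = defaultdict(int)
    for key, value in records:
        totals[key] += value
    return dict(totals)


worker_bundles = [
    [("cat", 1), ("cat", 5), ("dog", 5), ("cat", 9)],
    [("dog", 2), ("dog", 2), ("cat", 1)],
]

# Without lifting, every input record is shuffled.
unlifted_records = [rec for bundle in worker_bundles for rec in bundle]

# With lifting, each worker sends at most one record per key.
lifted_records = [
    rec for bundle in worker_bundles for rec in pre_combine(bundle)
]

unlifted_totals = shuffle_and_sum(unlifted_records)
lifted_totals = shuffle_and_sum(lifted_records)
```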

4.2.4.1. Simple combinations using simple functions

Beam YAML has the following built-in CombineFns: count, sum, min, max, mean, any, all, group, and concat. CombineFns from other languages can also be referenced as described in the full docs on aggregation (https://beam.apache.org/documentation/sdks/yaml-combine/).

The following example code shows a simple combine function.

Combining is done by modifying a grouping transform with the combining method. This method takes three parameters: the value to combine (either as a named property of the input elements, or a function of the entire input), the combining operation (either a binary function or a CombineFn), and finally a name for the combined value in the output object.

// Sum a collection of Integer values. The function SumInts implements the interface SerializableFunction.

public static class SumInts implements SerializableFunction<Iterable<Integer>, Integer> {

@Override

public Integer apply(Iterable<Integer> input) {

int sum = 0;

for (int item : input) {

sum += item;

}

return sum;

}

}

func sumInts(a, v int) int {

return a + v

}

func init() {

register.Function2x1(sumInts)

}

func globallySumInts(s beam.Scope, ints beam.PCollection) beam.PCollection {

return beam.Combine(s, sumInts, ints)

}

type boundedSum struct {

Bound int

}

func (fn *boundedSum) MergeAccumulators(a, v int) int {

sum := a + v

if fn.Bound > 0 && sum > fn.Bound {

return fn.Bound

}

return sum

}

func init() {

register.Combiner1[int](&boundedSum{})

}

func globallyBoundedSumInts(s beam.Scope, bound int, ints beam.PCollection) beam.PCollection {

return beam.Combine(s, &boundedSum{Bound: bound}, ints)

}

All Combiners should be registered using a generic register.CombinerX[...]

function. This allows the Go SDK to infer an encoding from any inputs/outputs,

registers the Combiner for execution on remote runners, and optimizes the runtime

execution of the Combiner via reflection.

Combiner1 should be used when your accumulator, input, and output are all of the

same type. It can be called with register.Combiner1[T](&CustomCombiner{}) where T

is the type of the input/accumulator/output.

Combiner2 should be used when your accumulator, input, and output are 2 distinct

types. It can be called with register.Combiner2[T1, T2](&CustomCombiner{}) where

T1 is the type of the accumulator and T2 is the other type.

Combiner3 should be used when your accumulator, input, and output are 3 distinct

types. It can be called with register.Combiner3[T1, T2, T3](&CustomCombiner{})

where T1 is the type of the accumulator, T2 is the type of the input, and T3 is

the type of the output.

4.2.4.2. Advanced combinations using CombineFn

For more complex combine functions, you can define a subclass of CombineFn.

You should use a CombineFn if the combine function requires a more sophisticated

accumulator, must perform additional pre- or post-processing, might change the

output type, or takes the key into account.

A general combining operation consists of five operations. When you create a subclass of CombineFn, you must provide five operations by overriding the corresponding methods. In the Go SDK, only MergeAccumulators is a required method; the others will have a default interpretation based on the accumulator type. The lifecycle methods are:

Create Accumulator creates a new “local” accumulator. In the example case, taking a mean average, a local accumulator tracks the running sum of values (the numerator value for our final average division) and the number of values summed so far (the denominator value). It may be called any number of times in a distributed fashion.

Add Input adds an input element to an accumulator, returning the accumulator value. In our example, it would update the sum and increment the count. It may also be invoked in parallel.

Merge Accumulators merges several accumulators into a single accumulator; this is how data in multiple accumulators is combined before the final calculation. In the case of the mean average computation, the accumulators representing each portion of the division are merged together. It may be called again on its outputs any number of times.

Extract Output performs the final computation. In the case of computing a mean average, this means dividing the combined sum of all the values by the number of values summed. It is called once on the final, merged accumulator.

Compact returns a more compact representation of the accumulator. This is called before an accumulator is sent across the wire, and can be useful in cases where values are buffered or otherwise lazily kept unprocessed when added to the accumulator. Compact should return an equivalent, though possibly modified, accumulator. In most cases, Compact is not necessary. For a real-world example of using Compact, see the Python SDK implementation of TopCombineFn.
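To make the lifecycle concrete, the sketch below models in plain Python (no Beam dependency) how a runner might drive these five operations for the mean example. The `MeanFn` class and the `run_combine` helper are hypothetical names introduced for illustration; a real runner decides on its own how input is split into bundles and when accumulators are merged or compacted.

```python
from typing import Iterable, List, Tuple

Accum = Tuple[float, int]  # (running sum, count)


class MeanFn:
    """Hypothetical stand-in exposing the same five operations as a CombineFn."""

    def create_accumulator(self) -> Accum:
        return (0.0, 0)

    def add_input(self, acc: Accum, value: float) -> Accum:
        total, count = acc
        return (total + value, count + 1)

    def merge_accumulators(self, accs: Iterable[Accum]) -> Accum:
        total, count = 0.0, 0
        for t, c in accs:
            total += t
            count += c
        return (total, count)

    def compact(self, acc: Accum) -> Accum:
        return acc  # nothing is buffered, so there is nothing to compact

    def extract_output(self, acc: Accum) -> float:
        total, count = acc
        return total / count if count else float("nan")


def run_combine(fn: MeanFn, bundles: List[List[float]]) -> float:
    """Drive the lifecycle as a runner might, with one accumulator per bundle."""
    partials = []
    for bundle in bundles:
        acc = fn.create_accumulator()         # one local accumulator per bundle
        for value in bundle:
            acc = fn.add_input(acc, value)    # fold each element in
        partials.append(fn.compact(acc))      # compact before "sending" it
    merged = fn.merge_accumulators(partials)  # combine the partial results
    return fn.extract_output(merged)          # final computation, done once


mean = run_combine(MeanFn(), [[1, 2], [3], [4, 5, 6]])
print(mean)  # 3.5
```

The result does not depend on how the input is split: a single bundle holding all six values yields the same mean, which is the property that lets the operations run in a distributed fashion.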

The following example code shows how to define a CombineFn that computes a

mean average:

public class AverageFn extends CombineFn<Integer, AverageFn.Accum, Double> {
  public static class Accum {
    int sum = 0;
    int count = 0;
  }

  @Override
  public Accum createAccumulator() { return new Accum(); }

  @Override
  public Accum addInput(Accum accum, Integer input) {
    accum.sum += input;
    accum.count++;
    return accum;
  }

  @Override
  public Accum mergeAccumulators(Iterable<Accum> accums) {
    Accum merged = createAccumulator();
    for (Accum accum : accums) {
      merged.sum += accum.sum;
      merged.count += accum.count;
    }
    return merged;
  }

  @Override
  public Double extractOutput(Accum accum) {
    return ((double) accum.sum) / accum.count;
  }

  // No-op
  @Override
  public Accum compact(Accum accum) { return accum; }
}

pc = ...

class AverageFn(beam.CombineFn):
  def create_accumulator(self):
    return (0.0, 0)

  def add_input(self, sum_count, input):
    (sum, count) = sum_count
    return sum + input, count + 1

  def merge_accumulators(self, accumulators):
    sums, counts = zip(*accumulators)
    return sum(sums), sum(counts)

  def extract_output(self, sum_count):
    (sum, count) = sum_count
    return sum / count if count else float('NaN')

  def compact(self, accumulator):
    # No-op
    return accumulator

type averageFn struct{}

type averageAccum struct {
	Count, Sum int
}

func (fn *averageFn) CreateAccumulator() averageAccum {
	return averageAccum{0, 0}
}

func (fn *averageFn) AddInput(a averageAccum, v int) averageAccum {
	return averageAccum{Count: a.Count + 1, Sum: a.Sum + v}
}

func (fn *averageFn) MergeAccumulators(a, v averageAccum) averageAccum {
	return averageAccum{Count: a.Count + v.Count, Sum: a.Sum + v.Sum}
}

func (fn *averageFn) ExtractOutput(a averageAccum) float64 {
	if a.Count == 0 {
		return math.NaN()
	}
	return float64(a.Sum) / float64(a.Count)
}

func (fn *averageFn) Compact(a averageAccum) averageAccum {
	// No-op
	return a
}

func init() {
	register.Combiner3[averageAccum, int, float64](&averageFn{})
}

const meanCombineFn: beam.CombineFn<number, [number, number], number> =

  {
    createAccumulator: () => [0, 0],
    addInput: ([sum, count]: [number, number], i: number) => [
      sum + i,
      count + 1,
    ],
    mergeAccumulators: (accumulators: [number, number][]) =>
      accumulators.reduce(([sum0, count0], [sum1, count1]) => [
        sum0 + sum1,
        count0 + count1,
      ]),
    extractOutput: ([sum, count]: [number, number]) => sum / count,
  };

4.2.4.3. Combining a PCollection into a single value

Use the global combine to transform all of the elements in a given PCollection into a single value, represented in your pipeline as a new PCollection containing one element. For example, applying the Beam-provided sum combine function to a PCollection of integers produces a single sum value.
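As a rough model of what a global combine computes, the plain-Python sketch below (no Beam dependency) reduces a collection that has been split into bundles and returns a one-element list standing in for the output PCollection. The `combine_globally` helper and the bundle layout are assumptions made for illustration; they are not Beam functions.

```python
from typing import Callable, List


def combine_globally(combine: Callable[[List[int]], int],
                     bundles: List[List[int]]) -> List[int]:
    """Hypothetical model: reduce each bundle, then reduce the partial results."""
    partials = [combine(bundle) for bundle in bundles]
    return [combine(partials)]  # a "collection" holding exactly one element


bundles = [[1, 10], [100], [1000]]
result = combine_globally(sum, bundles)
print(result)  # [1111]
```

Reducing the partial results with the same function only gives the right answer because `sum` is associative and commutative, so the grouping of elements into bundles does not affect the total.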

4.2.4.4. Combine and global windowing

If your input PCollection uses the default global windowing, the default behavior is to return a PCollection containing one item, even when the input is empty. That item’s value comes from the accumulator in the combine function that you specified when applying Combine, so for an empty input it reflects an accumulator that has received no elements. For example, the Beam-provided sum combine function returns a zero value (the sum of an empty input), while the min combine function returns a maximal or infinite value.
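A minimal plain-Python sketch of this default behavior follows, under the assumption that the default output is whatever the combine function extracts from a freshly created accumulator. The `SumFn`, `MinFn`, and `combine_with_default` names are hypothetical and are not Beam classes.

```python
import math


class SumFn:
    def create_accumulator(self):
        return 0

    def add_input(self, acc, value):
        return acc + value

    def extract_output(self, acc):
        return acc


class MinFn:
    def create_accumulator(self):
        return math.inf  # larger than any real input

    def add_input(self, acc, value):
        return min(acc, value)

    def extract_output(self, acc):
        return acc


def combine_with_default(fn, elements):
    """Model of a global combine: always yields exactly one element."""
    acc = fn.create_accumulator()
    for value in elements:
        acc = fn.add_input(acc, value)
    return [fn.extract_output(acc)]


print(combine_with_default(SumFn(), []))         # [0]
print(combine_with_default(MinFn(), []))         # [inf]
print(combine_with_default(MinFn(), [4, 2, 9]))  # [2]
```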

To have Combine instead return an empty PCollection if the input is empty,

specify .withoutDefaults when you apply your Combine transform, as in the

following code example:

func returnSideOrDefault(d float64, iter func(*float64) bool) float64 {
	var c float64
	if iter(&c) {
		// Side input has a value, so return it.
		return c
	}
	// Otherwise, return the default
	return d
}

func init() { register.Function2x1(returnSideOrDefault) }

func globallyAverageWithDefault(s beam.Scope, ints beam.PCollection) beam.PCollection {
	// The Go SDK has no helper function for setting combine defaults.
	average := beam.Combine(s, &averageFn{}, ints)

	// To add a default value:
	defaultValue := beam.Create(s, float64(0))
	return beam.ParDo(s, returnSideOrDefault, defaultValue, beam.SideInput{Input: average})
}

const pcoll = root.apply(

  beam.create([
    { player: "alice", accuracy: 1.0 },
    { player: "bob", accuracy: 0.99 },
    { player: "eve", accuracy: 0.5 },
    { player: "eve", accuracy: 0.25 },
  ])
);
const result = pcoll.apply(
  beam
    .groupGlobally()
    .combining("accuracy", combiners.mean, "mean")
    .combining("accuracy", combiners.max, "max")
);
const expected = [{ max: 1.0, mean: 0.685 }];

4.2.4.5. Combine and non-global windowing

If your PCollection uses any non-global windowing function, Beam does not

provide the default behavior. You must specify one of the following options when

applying Combine:

- Specify .withoutDefaults, where windows that are empty in the input PCollection will likewise be empty in the output collection.

- Specify .asSingletonView, in which the output is immediately converted to a PCollectionView, which will provide a default value for each empty window when used as a side input. You’ll generally only need to use this option if the result of your pipeline’s Combine is to be used as a side input later in the pipeline.

If your PCollection uses any non-global windowing function, the Beam Go SDK

behaves the same way as with global windowing. Windows that are empty in the input

PCollection will likewise be empty in the output collection.
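To illustrate the "empty windows stay empty" behavior, here is a plain-Python sketch that assigns timestamped values to fixed windows and sums each window. The window size, the `(timestamp, value)` layout, and the `sum_per_fixed_window` helper are assumptions made for this example; it does not use the Beam windowing API.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def sum_per_fixed_window(events: Iterable[Tuple[int, int]],
                         size: int) -> Dict[Tuple[int, int], int]:
    """Sum values per [start, start + size) window; empty windows get no entry."""
    sums: Dict[Tuple[int, int], int] = defaultdict(int)
    for timestamp, value in events:
        start = timestamp - (timestamp % size)
        sums[(start, start + size)] += value
    return dict(sums)


# No event falls in [60, 120), so that window is absent from the result.
events = [(5, 1), (42, 2), (130, 10)]
print(sum_per_fixed_window(events, 60))
# {(0, 60): 3, (120, 180): 10}
```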

4.2.4.6. Combining values in a keyed PCollection

After creating a keyed PCollection (for example, by using a GroupByKey

transform), a common pattern is to combine the collection of values associated

with each key into a single, merged value. Drawing on the previous example from

GroupByKey, a key-grouped PCollection called groupedWords looks like this:

cat, [1,5,9]

dog, [5,2]

and, [1,2,6]

jump, [3]

tree, [2]

...

In the above PCollection, each element has a string key (for example, “cat”)

and an iterable of integers for its value (in the first element, containing [1,

5, 9]). If your pipeline’s next processing step combines the values (rather than

considering them individually), you can combine the iterable of integers to

create a single, merged value to be paired with each key. This pattern of a

GroupByKey followed by merging the collection of values is equivalent to

Beam’s Combine PerKey transform. The combine function you supply to Combine

PerKey must be an associative reduction function or a

subclass of CombineFn.
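The equivalence described above can be sketched in plain Python (no Beam dependency), using the groupedWords data. The `group_by_key` and `combine_per_key` helpers are hypothetical stand-ins, and the built-in `sum` plays the role of the associative reduction function.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple


def group_by_key(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Collect every value that shares a key into one list."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def combine_per_key(pairs: Iterable[Tuple[str, int]],
                    combine: Callable[[List[int]], int]) -> Dict[str, int]:
    """Group the values by key, then merge each group into one value."""
    return {key: combine(values) for key, values in group_by_key(pairs).items()}


pairs = [("cat", 1), ("dog", 5), ("and", 1), ("jump", 3), ("tree", 2),
         ("cat", 5), ("dog", 2), ("and", 2), ("cat", 9), ("and", 6)]

print(group_by_key(pairs)["cat"])  # [1, 5, 9]
print(combine_per_key(pairs, sum))
# {'cat': 15, 'dog': 7, 'and': 9, 'jump': 3, 'tree': 2}
```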

// PCollection is grouped by key and the Double values associated with each key are combined into a Double.
PCollection<KV<String, Double>> salesRecords = ...;
PCollection<KV<String, Double>> totalSalesPerPerson =
  salesRecords.apply(Combine.<String, Double, Double>perKey(
    new Sum.SumDoubleFn()));

// The combined value is of a different type than the original collection of values per key. PCollection has
// keys of type String and values of type Integer, and the combined value is a Double.
PCollection<KV<String, Integer>> playerAccuracy = ...;
PCollection<KV<String, Double>> avgAccuracyPerPlayer =
  playerAccuracy.apply(Combine.<String, Integer, Double>perKey(
    new MeanInts()));

const pcoll = root.apply(

  beam.create([
    { player: "alice", accuracy: 1.0 },
    { player: "bob", accuracy: 0.99 },
    { player: "eve", accuracy: 0.5 },
    { player: "eve", accuracy: 0.25 },
  ])
);
const result = pcoll.apply(
  beam
    .groupBy("player")
    .combining("accuracy", combiners.mean, "mean")
    .combining("accuracy", combiners.max, "max")
);
const expected = [
  { player: "alice", mean: 1.0, max: 1.0 },
  { player: "bob", mean: 0.99, max: 0.99 },
  { player: "eve", mean: 0.375, max: 0.5 },
];

4.2.5. Flatten

Flatten

is a Beam transform for PCollection objects that store the same data type.

Flatten merges multiple PCollection objects into a single logical

PCollection.

The following example shows how to apply a Flatten transform to merge multiple

PCollection objects.

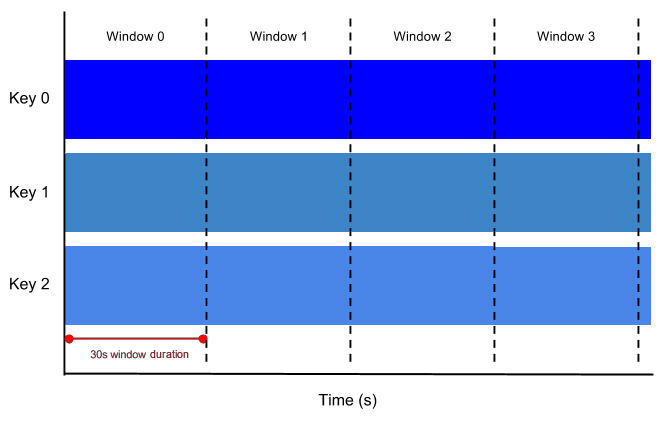

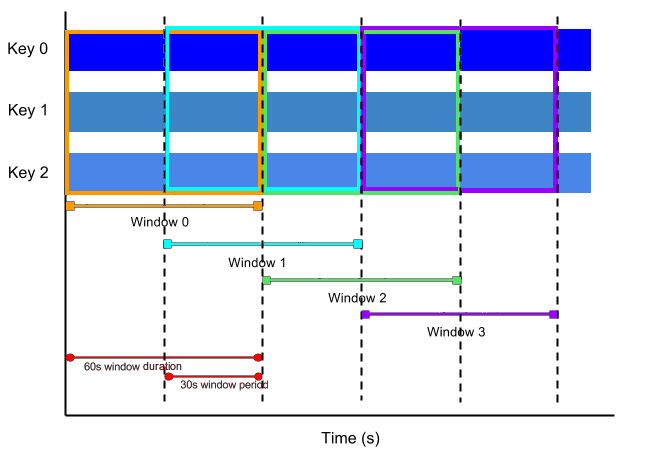

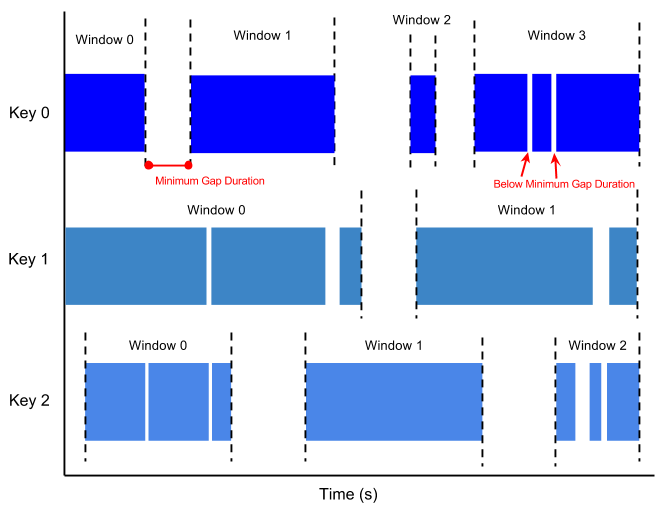

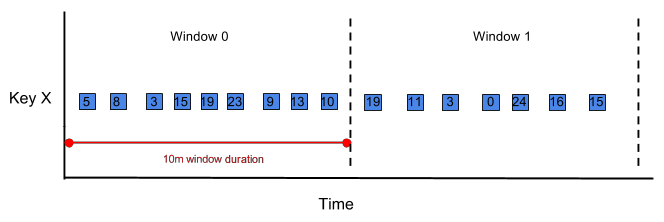

// Flatten takes a PCollectionList of PCollection objects of a given type.